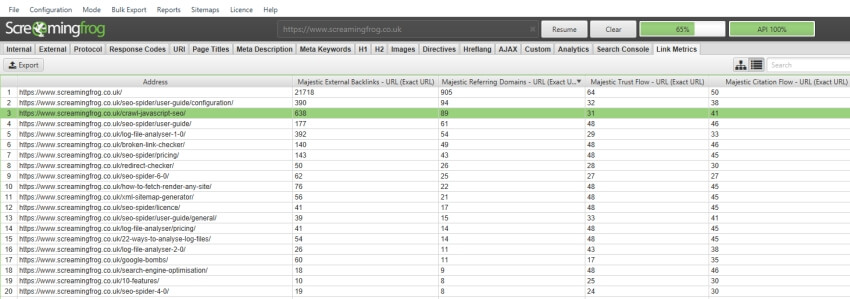

Having used Screaming Frog since its release in 2010, I’m pretty sure I’ve nailed the best settings for most site audits. The following is a guide to these settings. I’ll explain what I change from the defaults and the reasoning behind my choices. I’ve added screenshots for many of the settings and coloured the ticks green where I tick boxes that are not ticked by default. This isn’t a guide to every single setting in the tool, only the ones I change from the default. So, for any settings not mentioned, I suggest keeping the default settings.

#SCREAMING FROG SEO SPIDER HOW MANY COMPUTERS INSTALL#

The default settings applied on install aren’t sufficient for doing a full audit and need to be tweaked to get best results. Often missing issues due to the default settings. Having trained people on using Screaming Frog since 2010, I know that new users struggle to understand what the best settings are for doing audits. However, I haven’t seen a guide on what the best settings are for doing full site audits. There are some great guides on the different ways you can use it and the official site has a big amount of documentation on all the different settings, as there are a lot! What would we do with it! (Remember using Xenu?) However, this URL isn’t properly indexed by Google.Īnd – if you don’t believe that the screenshot above proves that Google isn’t always crawling JavaScript properly, let me show you one more example.Screaming Frog, my favourite, most used SEO Tool and I imagine the most used SEO tool in the industry.

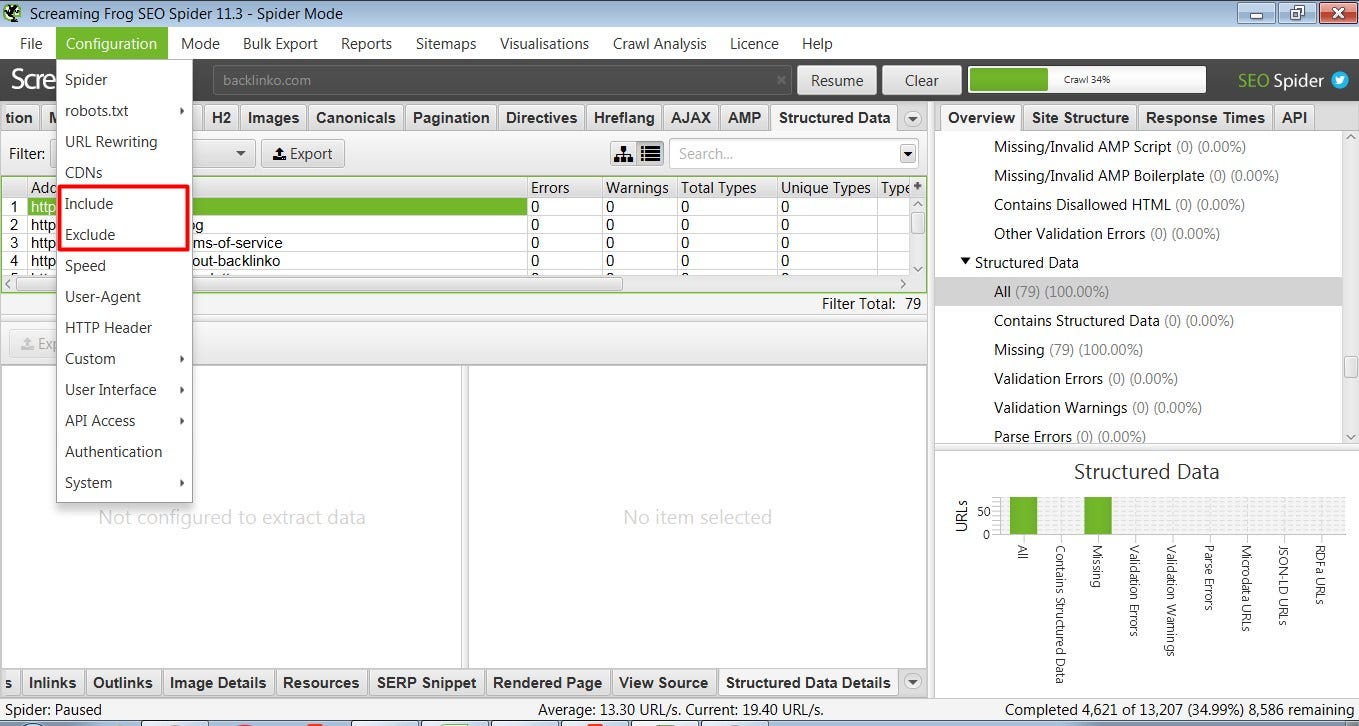

Let me show you an example – as you saw above, Screaming Frog properly crawled and rendered this URL. This is why so many JS websites are investing in prerendering services. However, Google doesn’t crawl JavaScript in the same way. Please keep in mind that the data you get from Screaming Frog is basically how correctly rendered JavaScript should look like. That’s – we are now successfully crawling JavaScript with Screaming Frog. If you already have Screaming Frog installed on your computer, all you have to do is go to Configuration → Spider → Rendering and select JavaScript and enable “Rendered Page Screen Shots.”Īfter setting this up, we can start crawling data and see each page rendered.

Few people know that, since version 6.0, Screaming Frog supports rendered crawling. The simplest possible way to start with JavaScript crawling is by using Screaming Frog SEO Spider.

#SCREAMING FROG SEO SPIDER HOW MANY COMPUTERS CODE#

If you now inspect the page with a browser tool, e.g. the “Inspect code” feature in Google Chrome, you won’t see the source code as it really is. Instead, what you’ll see is DOM-processed and JavaScript-rendered code.

Basically, what you see above is code “processed” by the browser. To see what the source code looked like before rendering, you need to use the “View Page Source” option.

After doing so, you can quickly notice that all the content you saw on the page isn’t actually present within the code. This is why crawling JavaScript websites without processing the DOM, loading dynamic content, and rendering JavaScript is pointless.

As you can see above, with JavaScript rendering disabled, crawlers can’t process a website’s code or content, and, therefore, the crawled data is useless.
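To make the gap between “View Page Source” and the rendered DOM a bit more concrete, here is a minimal Python sketch. The two HTML snippets are made-up stand-ins (they are not taken from any real site), and `visible_words` is a hypothetical helper name; the point is only that a parser working on the raw source of a JavaScript app finds no readable content, while the same parser on a rendered snapshot does.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Gathers text nodes, skipping the contents of <script> and <style>."""

    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.words = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Only keep text that a visitor could actually read on the page.
        if not self._skip_depth:
            self.words.extend(data.split())


def visible_words(html):
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return parser.words


# Hypothetical raw source, as "View Page Source" would show it.
RAW_SOURCE = (
    '<html><body><div id="root"></div>'
    '<script>renderApp("root")</script></body></html>'
)

# Hypothetical DOM snapshot after the browser has run the JavaScript.
RENDERED_DOM = (
    '<html><body><div id="root"><h1>Example show</h1>'
    '<p>Watch every episode online.</p></div>'
    '<script>renderApp("root")</script></body></html>'
)

raw_words = visible_words(RAW_SOURCE)
rendered_words = visible_words(RENDERED_DOM)

print("words in raw source:  ", len(raw_words))
print("words in rendered DOM:", len(rendered_words))
```

A large difference between the two counts is a rough hint that a page depends on JavaScript rendering; it is a heuristic for illustration, not how any particular crawler decides.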

#SCREAMING FROG SEO SPIDER HOW MANY COMPUTERS TV#

SEO for JavaScript websites is considered one of the most complicated fields of technical SEO. Fortunately, there are more and more data, case studies, and tools to make this a little bit easier, even for technical SEO rookies.

The answer to why it is so complicated is somewhat complex and could just as well be a separate article. However, to simplify this topic, let’s just say that it is all about computing power. With HTML-based websites (PHP, CSS, etc.), crawlers can “see” the website’s content by analyzing the code. With JavaScript and dynamic content-based websites, a crawler must read and analyze the Document Object Model (DOM). Such a website also has to be fully rendered after loading and processing all the code. The most straightforward tool we can use to see the rendered website is… a browser. This is why crawling JavaScript is often referred to as crawling using “headless browsers.”

Crawling JavaScript websites without rendering or reading DOM

Before moving forward, let me show you an example of a JavaScript website that you all know. To make it even more specific, let’s have a look at the “Casual” TV show landing page.
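The contrast described here, between analyzing the code of an HTML page and having to read the DOM of a JavaScript page, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `LinkExtractor` and `extract_links` are hypothetical names, the markup and the `example.com` base URL are invented, and real crawlers do considerably more. It shows a non-rendering crawler discovering links on a server-rendered page and finding none in a JavaScript app shell.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects href values from <a> tags, as a non-rendering crawler would."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        for name, value in attrs:
            if name == "href" and value:
                # Resolve relative links against the page URL.
                self.links.append(urljoin(self.base_url, value))


def extract_links(html, base_url):
    parser = LinkExtractor(base_url)
    parser.feed(html)
    parser.close()
    return parser.links


# Hypothetical server-rendered page: the links are present in the code.
STATIC_HTML = """
<html><body>
  <h1>Shows</h1>
  <a href="/shows/one">Show one</a>
  <a href="/shows/two">Show two</a>
</body></html>
"""

# Hypothetical JavaScript app shell: links only exist after rendering.
JS_SHELL_HTML = """
<html><body>
  <div id="app"></div>
  <script src="/bundle.js"></script>
</body></html>
"""

BASE = "https://example.com/"
static_links = extract_links(STATIC_HTML, BASE)
shell_links = extract_links(JS_SHELL_HTML, BASE)

print("static page links:", static_links)
print("app shell links:  ", shell_links)
```

With the app shell, the crawl stops at the first URL because there is nothing to follow, which is why a rendering step (a headless browser) is needed before link discovery.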
